Linus Torvalds on Intel And AMD's New Approaches To Interrupt And Exception Handling - And Microkernels

AMD and Intel are both working on new standards for handling interrupts and exceptions on x86-64 processors. AMD is proposing a set of new "Supervisor Entry" extensions as a band-aid to the current interrupt descriptor table event handling system. Intel wants to throw that whole legacy system away and start over with a fundamentally different "Flexible Return and Event Delivery" (FRED) system. Linux architect Linus Torvalds weighed in on the merits of both approaches a few weeks ago.

written by 윤채경 (Yoon Chae-kyung) 2021-03-30 - last edited 2021-04-10. © CC BY

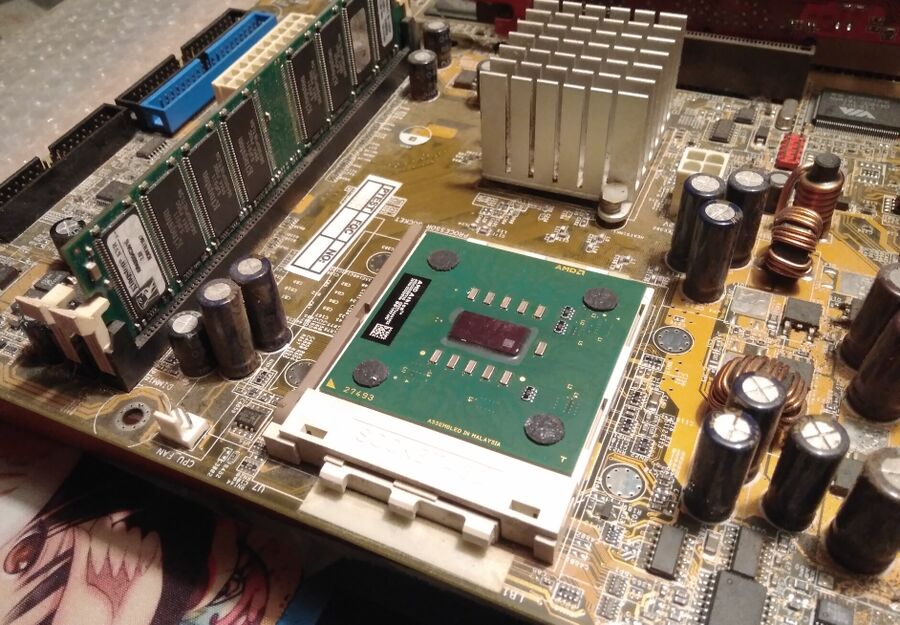

An AMD Athlon XP 3000+ (AXDA30000DKV4D) processor socketed on an ASUS A7V8X-LA motherboard.

Modern x86-64 processors have some oddities and outright flaws in the way they handle interrupts and exceptions. Those oddities are not new: the interrupt descriptor table is part of a legacy that goes back all the way to the 16-bit i80286 processors launched by Intel on February 1st, 1982. Many of the basic design principles from those days, nearly 40 years ago, are still there in modern x86-64 processors.

AMD and Intel have both proposed better ways of doing interrupt and exception handling in the last few months. AMD published a proposal titled "AMD Supervisor Entry Extensions" in February, and Intel published a very different one titled "Flexible Return and Event Delivery" (FRED) in March.

This is how AMD describes the problem in its AMD Supervisor Entry Extensions proposal:

"The SYSCALL and SYSRET instructions do not atomically switch all CPU state necessary to transition a modern OS between user and supervisor mode. After SYSCALL entry, a typical OS requires performing a SWAPGS instruction and loading a kernel stack pointer, and potentially a shadow stack pointer, before the kernel can process interrupts and exceptions. While SYSCALL clears IF blocking normal interrupts, it is still possible for non-maskable interrupts (NMIs) to occur. Additionally, certain types of x86 exceptions may occur during this time including #DB, #HV, #SX, etc.

There is no architectural protection against being in the handler for an exception and receiving the same exception again. For example, a #SX could occur during the #SX handler. When exceptions use the IST mechanism to establish a stack, taking the same exception again can lead to an infinite loop as the exception frame on the stack gets overwritten."

published February 2021
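
The frame overwrite AMD describes can be illustrated with a toy model. The sketch below does not reproduce real x86 frame layouts: `ToyCpu`, `deliver`, `iret`, the labels, and the stack addresses are all hypothetical names and values chosen for illustration. It assumes only what the quote states, namely that an IST entry always starts from the same fixed stack top, so a second exception of the same kind writes its frame over the first.

```python
class ToyCpu:
    """Toy model of exception frame placement (not a real x86 frame layout)."""

    IST_TOP = 1000  # hypothetical fixed stack top used for every IST entry

    def __init__(self, use_ist):
        self.use_ist = use_ist
        self.sp = 100      # hypothetical current stack pointer
        self.memory = {}   # address -> saved "where to resume" label

    def deliver(self, resume_at):
        """Push a one-slot exception frame and return its address."""
        if self.use_ist:
            # IST behavior in this model: always restart from the same top.
            self.sp = self.IST_TOP
        self.sp -= 1
        self.memory[self.sp] = resume_at
        return self.sp

    def iret(self, frame):
        """Return the resume label stored in the given frame."""
        return self.memory[frame]


def outer_handler_resumes_at(use_ist):
    cpu = ToyCpu(use_ist)
    outer = cpu.deliver("user_code")      # first exception interrupts user code
    inner = cpu.deliver("outer_handler")  # same exception hits inside the handler
    assert cpu.iret(inner) == "outer_handler"
    return cpu.iret(outer)                # where the outer handler ends up


print(outer_handler_resumes_at(use_ist=False))  # user_code
print(outer_handler_resumes_at(use_ist=True))   # outer_handler
```

With the fixed stack top, the frame that recorded the way back to user code is gone, so the outer handler returns into itself. That is the loop the AMD document warns about.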

AMD proposes several new processor instructions, replacing the old SYSCALL and SYSRET instructions with a new "Enhanced SYSCALL" (ESC) instruction and new "Enhanced SYSRET" behavior when SYSRET is executed following an "Enhanced SYSCALL". The "AMD Supervisor Entry Extensions" document is just 18 pages long, 13 if you discount the "License Agreement" drivel and the front cover on the first five pages. It has some pseudo-code illustrating how their new enhanced SYSCALL would work.

AMD's short proposal keeps things pretty much as they are; they are essentially trying to put lipstick on a pig.

Intel's proposal is vastly different. Their Flexible Return and Event Delivery (FRED) document is a whopping 46 pages long. The "Introduction" describes their much more complex approach this way:

"The FRED architecture defines simple new transitions that change privilege level (ring transitions). The FRED architecture was designed with the following goals:

- Improve overall performance and response time by replacing event delivery through the interrupt descriptor table (IDT event delivery) and event return by the IRET instruction with lower latency transitions.

- Improve software robustness by ensuring that event delivery establishes the full supervisor context and that event return establishes the full user context

The new transitions defined by the FRED architecture are FRED event delivery and, for returning from events, two FRED return instructions."

These are very different approaches. AMD is proposing a band-aid; Intel wants a complete makeover.

Linus Torvalds' Expert Opinion

Linus Torvalds at LinuxCon Europe. Photo credit: Krd, CC-BY-SA

You will never have to think about how low-level system calls are handled if you write some simple program in C++ using the Qt toolkit or some cross-platform mobile-style application in Dart using Google's Flutter toolkit. Linus Torvalds, on the other hand, has to deal with low-level instructions and things like Intel's AVX512 mess all the time. He is therefore in a good position to comment on the new proposals from Intel and AMD.

Linus Torvalds had this to say about it in a message on his favorite, and probably also only, web forum:

"The AMD version is essentially "Fix known bugs in the exception handling definition".

The Intel version is basically "Yeah, the protected mode 80286 exception handling was bad, then 386 made it odder with the 32-bit extensions, and then syscall/sysenter made everything worse, and then the x86-64 extensions introduced even more problems. So let's add a mode bit where all the crap goes away".

In contrast, the AMD one is basically a minimal effort to fix actual fundamental problems with all that legacy-induced crap that are nasty to work around and that have caused issues.

For a short list of "IDT exception handling problems", I'll just enumerate some of them:

(a) IDT itself is a horrible nasty format and you shouldn't have to parse memory in odd ways to handle exceptions. It was fundamentally bad from the 80286 beginnings, it got a tiny bit harder to parse for 32-bit, and it arguably got much worse in x86-64.

(b) %rsp not being restored properly by return-to-user mode.

(c) delayed debug traps into supervisor mode

(d) several bad exception nesting problems (NMI, machine checks and STI-shadow handling at the very least)

(e) various atomicity problems with gsbase (swapgs) and stack pointer switching

(f) several different exception stack layouts, and literally hundreds of different entrypoints for exceptions, interrupts and system calls (and that's not even counting the call gates that nobody should use in the first place).

But I suspect I forgot some.

AMD aims to fix the outright historical bugs (b)-(e), but keeps things otherwise the same (ie it adds a bit more code to the microcode that basically replaces some of the horrible hacks you currently have to do - badly - in system software).

The Intel one is the recognition that there was more wrong than just the outright bugs that had to be worked around by system software, and introduces a "fixed exceptions" model, which basically fixes all of the above.

Both are valid on their own, and they are actually fairly independent. Honestly, the AMD paper looks like a quick "we haven't even finished thinking all the details through, but we know these parts were broken, so we might as well release this".

I don't know how long it has been brewing, but judging by the "TBD" things in that paper, I think it's a "early rough draft"."

reply by Linus Torvalds, March 13, 2021

Torvalds went on to say that while AMD's proposed "quick fix" would be easier to implement for him and other operating system vendors, it's not ideal in the long run. Intel's proposal throws the entire existing interrupt descriptor table (IDT) delivery system under the bus so it can be replaced with what they call a new "FRED event delivery" system. Torvalds believes this is a better long-term solution.

"The Intel FRED stuff has several years of background, and honestly, I think is the right thing to do. It really relegates the whole IDT to a "we don't even use this at all, unless you have legacy segment selectors". Good riddance to a truly horrid thing that goes back to a truly disgusting CPU architecture: the 80286.

I hope both vendors end up doing both of those things. The AMD version is better if you are an OS vendor that wants to change as little as humanly possible at the OS level, and get rid of known problems. Think "legacy OS that we can't really make big changes to".

But I think the Intel version is better if you think that x86-64 should actually survive longer-term, and you actually want to improve exception handling and speed things up (the "F" historically stood for "Fast", I'm not sure why they've apparently renamed it "Flexible").

Honestly, I like the AMD model of "release early for discussion". It's what they did with the original x86-64 (aka "amd64") spec. I think their paper is very much in line with that original spec, both in that "early release about a non-final this is what we want to do" and a "minimal changes to existing hardware".

But I do think that the Intel approach is actually the better fix to some of the nastiest parts of the whole architecture.

If you ever expect to eventually live in a world where the old 32-bit legacy isn't really relevant any more (we can already pretty much already discount the x86 16-bit modes), the Fred approach actually takes you a good step in that direction, I think.

Anyway, I hope this won't be a fragmentation issue, but realistically, because of how little changes the AMD model does to the legacy mode, and how independent the whole Fred exception model is from the legacy mode, even if you don't end up in that "everybody does both", I suspect it's not exceptionally (pun intended) hard to just support both.

After all, Fred is very clearly defined to have an entirely new model, and any OS vendor that goes that way will still have to support the legacy exception model for older CPU's.

The point being that the Fred exception handling is much simpler, but it's entirely separate code and logic, explicitly bolted to the side in the hope that the original code and logic can be removed entirely some day.

In contrast, the AMD model is meant to very explicitly interface with existing code, and just allow people to avoid the fragile (and sometimes expensive) hacks and workarounds they already have.

So they actually have very little overlap - both conceptually and from a "implementation and use" standpoint."

reply by Linus Torvalds, March 13, 2021

Torvalds is very clearly in favor of fixing the underlying problems in the x86-64 architecture instead of a "quick fix" - even though it would be much easier to adjust the Linux kernel so it supports the new "quick fix" CPU instructions AMD proposes.

User-Space And Kernel-Space

Some of those who replied in the thread about the new interrupt handling proposals raised concerns that Intel's proposed "Flexible Return and Event Delivery" system lacks any support for rings 1 and 2.

"FRED event delivery can effect a transition from ring 3 to ring 0, but is also used to deliver events incident to ring 0. One FRED instruction (ERETU) effects a return from ring 0 to ring 3, while the other (ERETS) returns while remaining in ring 0."

Linus Torvalds, apparently, could not care less about rings 1 and 2.
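
The two-ring design in the FRED text quoted above can be sketched as a tiny state machine. This is a minimal illustration, not Intel's specification: the function names are hypothetical, and treating ERETU and ERETS as invalid outside ring 0 is an assumption of the model. It encodes only the transitions the quote names and then checks which rings can ever be reached.

```python
USER, SUPERVISOR = 3, 0  # the only two rings the quoted FRED text mentions


def fred_event_delivery(ring):
    """Event delivery always lands in ring 0, from ring 3 or from ring 0."""
    if ring not in (USER, SUPERVISOR):
        raise ValueError("this model has no ring %d" % ring)
    return SUPERVISOR


def eretu(ring):
    """ERETU: return from ring 0 to ring 3."""
    if ring != SUPERVISOR:
        # Assumption of this sketch, not taken from the quoted text.
        raise ValueError("ERETU modeled as valid only in ring 0")
    return USER


def erets(ring):
    """ERETS: return while remaining in ring 0."""
    if ring != SUPERVISOR:
        # Assumption of this sketch, not taken from the quoted text.
        raise ValueError("ERETS modeled as valid only in ring 0")
    return SUPERVISOR


def reachable_rings(start=USER):
    """Follow every modeled transition and collect the rings visited."""
    seen, todo = set(), [start]
    while todo:
        ring = todo.pop()
        if ring in seen:
            continue
        seen.add(ring)
        todo.append(fred_event_delivery(ring))
        if ring == SUPERVISOR:
            todo.extend([eretu(ring), erets(ring)])
    return seen


print(sorted(reachable_rings()))  # [0, 3]
```

No sequence of these transitions ever lands in ring 1 or ring 2, which is the property the forum participants were asking about.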

"Andrey (andrey.semashev.delete@this.gmail.com) on March 14, 2021 4:15 pm wrote:

> In the FRED model, I'm kind of alerted that they are dropping rings 1 and 2, basically leaving

> kernel and userspace with nothing in between. This fits Linux and Windows needs, but I wonder

> if that would be limiting for other designs, like microkernels? What was the intended use case

> of the middle rings if not some semi-privileged stuff like some drivers and system services?

The middle rings were always completely and entirely useless.

There is basically absolutely no difference between rings 1-3: the paging model doesn't make a difference, and the only support for it is in some truly esoteric segment and call gate usage that no sane person should ever do, and that just means that your OS will never be relevant on anything else than x86, and will perform badly even there.

You don't need them. Not even for microkernels. If you think you do, you've done something truly horrendously wrong to begin with.

In fact, the main reason I like Fred is that it really is a big step towards not only getting rid of a broken IDT ring transition model, but eventually getting rid of segmentation entirely. All that remains is basically the gsbase/fsbase for variations of thread-local data (which is useful in ways segments are not)."

reply by Linus Torvalds, March 14, 2021

Torvalds went on to say that segmentation, and the four-ring model that came with it, was a bad idea to begin with.

"But segments, the way they were introduced in the 80286? Total and utter garbage. If you think you need them or want them (other than for legacy retro-computing), I suggest you need to really re-examine your life choices.

(Side note: in many ways x86 supervisor/CPL0 mode is a "middle ring", since you have modes beyond it with higher access rights - SMM (ugh) and virtualization. So it's not that you don't necessarily want more than two security domains, but the way 80286 did it with four rings and segmentation was always the wrong model entirely.

The whole "one ring for applications, one ring for device drivers, one ring for system software, and one ring to rule them all" thing was just truly horrendously bad Tolkien fan-fiction."

reply by Linus Torvalds, March 14, 2021

Some of our readers may be able to guess where the discussion went from there now that the M-word, "microkernel", had been mentioned.

Torvalds On The Merits Of Microkernels

Linux is one big monolithic kernel. Without elaborating on the technical merits of microkernels and monolithic kernels and their strengths and weaknesses, suffice it to say that one does not simply tell the Linux kernel's author and architect that microkernels are somehow superior. "Doug S", who preferred to post anonymously, made that mistake.

"Doug S (foo.delete@this.bar.bar) on March 17, 2021 9:30 am wrote:

> While everyone agrees that the performance hit of microkernels is real, for applications where

> security is paramount it is totally worth it.

Bah, you're just parroting the usual party line that had absolutely no basis in reality and when you look into the details, doesn't actually hold up.

It's all theory and handwaving and just repeating the same old FUD that was never actually really relevant.

If you actually want security - and this isn't some theory, this is how people actually do it - you implement physically separate systems, and you make the secure side much simpler, and you do code review like there is no tomorrow. Ie automotive, automation, things like that."

reply by Linus Torvalds, March 17, 2021

"Doug S" was not the only microkernel-fanatic who conveniently put his head on the chopping-block that day.

"dmcq (dmcq.delete@this.fano.co.uk) on March 17, 2021 11:15 am wrote:

> So the question becomes - can the performance of IPC be dramatically improved?

The answer is simple: "no".

It's just fundamentally more expensive to send some kind of message than it is to just share the data structures and use the cache coherency.

The only way "IPC" is competitive is if you define IPC as "memory mapping your data structures in shared memory".

We call that "monolithic kernel" exactly because it shares that data address space.

In other words, your whole argument hinges on unreality. In a world that doesn't exist, and no reasonable person expects to exist or even has a realistic plan for, in any kind of reasonable timeframe, your argument simply makes absolutely zero sense at all.

Please make a distinction between reality and your dream world, and just admit it, and stop making arguments that make no sense in other peoples realities, ok?

The name of the forum is "real world tech". Not "my private fantasy tech"."

reply by Linus Torvalds, March 17, 2021

"AMD Supervisor Entry Extensions" Or "Flexible Return and Event Delivery (FRED)"

While the pros and cons of Intel's and AMD's respective proposals for interrupt and event handling in future processors are worthy of discussion, in reality it is mostly up to Intel. They are the bigger and more powerful corporation. It is more likely than not that future processors from Intel will use their proposed Flexible Return and Event Delivery system. Their next-generation processors won't; it will take years, not months, before consumer CPUs have the FRED technology. Remember, the above-mentioned technical document was published earlier this month. Things do not magically go from the drawing board to store shelves overnight.

Intel isn't going to just hand the FRED technology over to AMD and help them implement it. We will likely see both move forward with their own proposals. Intel will have FRED and AMD will have Supervisor Entry Extensions until AMD, inevitably, adopts FRED or some form of it years down the line.

We sincerely hope you enjoyed this article and the legendary quotes from Linus Torvalds in it.
