Btrfs Was Not Meant For RAID5 or 6

As of the newly released btrfs-progs 5.11, the mkfs.btrfs utility in the btrfs-progs package will warn you if you try to create a btrfs RAID5 or RAID6 array. The manual page has long warned that such arrays are "considered experimental and shouldn’t be employed for production use".

written by 윤채경 (Yoon Chae-kyung) 2021-03-11 - last edited 2021-03-11. © CC BY

Don't do this unless you're using old, very dead HDDs.

Using RAID is a very good idea. Not because it is the same as having a backup; it isn't. RAID is a good idea because it saves downtime. If one drive fails in a RAID6 array created with mdadm, the array keeps working and can even survive the loss of a second drive, so you can simply order a replacement. There won't be any downtime when the replacement arrives either: you can use a RAID6 array normally while it is rebuilding. Without RAID, a single failed drive leaves you without that drive while you wait for a replacement, and even if you happen to have a recent backup you'll have to restore its contents when the replacement arrives. This is why it is a good idea to have everything in a RAID setup.
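The redundancy argument above can be sketched as a tiny model. This is a simplified illustration, assuming identical drives and ignoring rebuild windows and read errors; the function name `array_state` and the state labels are made up for this example. It shows that a RAID6 array keeps working with two dead drives and only loses data at the third, while RAID5 (and a two-drive RAID1 mirror) has no redundancy left after the first failure.

```python
# Simplified model of how an array's health changes as drives fail.
# Assumes identical drives; ignores rebuild time and unreadable sectors.

# How many dead drives each layout can absorb without losing data.
# "raid1" here means a two-drive mirror.
TOLERATED_FAILURES = {"raid1": 1, "raid5": 1, "raid6": 2}


def array_state(level: str, failed: int) -> str:
    """Describe an array of the given level with `failed` dead drives."""
    tolerance = TOLERATED_FAILURES[level]
    if failed == 0:
        return "optimal"
    if failed < tolerance:
        # Still degraded, but one more failure can be absorbed.
        return "degraded, still redundant"
    if failed == tolerance:
        # Still serving data, but the next failure loses the array.
        return "degraded, no redundancy left"
    return "failed, restore from backup"


if __name__ == "__main__":
    for failed in range(4):
        print(f"raid6 with {failed} failed drive(s): {array_state('raid6', failed)}")
```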

RAID1, a RAID mode that mirrors two drives, is a good solution for root file systems where binaries and libraries are stored. RAID6 is a good solution for storage arrays if you happen to have 5 or more drives. RAID5 shouldn't really be used by anyone, ever, unless you have 3 identical disks and can't afford two more (or your motherboard or case limits the number of drives you can have).
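The drive counts above come down to simple arithmetic. Here is a minimal sketch, assuming identical drives and the conventional minimum drive counts (2 for a RAID1 mirror, 3 for RAID5, 4 for RAID6); `usable_capacity` is a hypothetical helper, not part of mdadm or btrfs-progs.

```python
# Conventional minimum member counts assumed for this sketch.
MIN_DRIVES = {"raid1": 2, "raid5": 3, "raid6": 4}
# Drives' worth of space spent on parity (RAID1 is handled separately).
PARITY_DRIVES = {"raid5": 1, "raid6": 2}


def usable_capacity(level: str, drives: int, drive_tb: float) -> float:
    """Usable space in TB for `drives` identical drives of `drive_tb` TB each."""
    if level not in MIN_DRIVES:
        raise ValueError(f"unknown RAID level: {level}")
    if drives < MIN_DRIVES[level]:
        raise ValueError(f"{level} needs at least {MIN_DRIVES[level]} drives")
    if level == "raid1":
        # mdadm-style RAID1: every member holds a full copy of the data.
        return drive_tb
    # Parity levels: one (RAID5) or two (RAID6) drives' worth goes to parity.
    return (drives - PARITY_DRIVES[level]) * drive_tb


if __name__ == "__main__":
    for level, drives in [("raid1", 2), ("raid5", 3), ("raid6", 4), ("raid6", 5), ("raid6", 8)]:
        usable = usable_capacity(level, drives, 4.0)
        share = usable / (drives * 4.0)
        print(f"{level} x{drives}: {usable:.0f} TB usable ({share:.0%} of raw)")
```

With four 4 TB drives, RAID6 leaves 8 TB usable, half the raw space and the same ratio as mirroring; with five drives it leaves 12 TB, and the ratio keeps improving as drives are added. That is one way to read the "5 or more" advice.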

RAID arrays are best made using mdadm on Linux machines. mdadm is very well supported and very mature, and if your motherboard dies due to a bad capacitor or something like that, you can reassemble an mdadm array on any box that can connect the drives you are using.

The Btrfs file system lets you create RAID arrays directly, without a separate layer beneath it. The mdadm way creates an array block device that can then hold an XFS or ext4 file system; btrfs lets you skip mdadm and create RAID arrays that are managed and controlled by btrfs itself.
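The difference between the two approaches shows up in how the arrays are created. The sketch below only builds and prints command lines; it does not run anything. The device names `/dev/sdb` to `/dev/sdf` and `/dev/md0` are placeholders, and the helper functions are hypothetical. The flags used (`mdadm --create`, `--level`, `--raid-devices` and `mkfs.btrfs -d`/`-m`) are the standard ones, but check the manual pages before running anything against real disks.

```python
import shlex


def mdadm_then_xfs(md_device: str, members: list[str], level: int) -> list[list[str]]:
    """Two layers: mdadm assembles the array, then a file system goes on top."""
    create = [
        "mdadm", "--create", md_device,
        f"--level={level}",
        f"--raid-devices={len(members)}",
        *members,
    ]
    # The file system only ever sees the single md block device.
    mkfs = ["mkfs.xfs", md_device]
    return [create, mkfs]


def btrfs_native(members: list[str], profile: str) -> list[list[str]]:
    """One layer: btrfs handles redundancy for data (-d) and metadata (-m)."""
    return [["mkfs.btrfs", "-d", profile, "-m", profile, *members]]


if __name__ == "__main__":
    disks = ["/dev/sdb", "/dev/sdc", "/dev/sdd", "/dev/sde", "/dev/sdf"]
    for cmd in mdadm_then_xfs("/dev/md0", disks, 6):
        print(shlex.join(cmd))
    for cmd in btrfs_native(disks[:2], "raid1"):
        print(shlex.join(cmd))
```

The native btrfs example uses the raid1 profile, since the raid5 and raid6 profiles are exactly what mkfs.btrfs now warns about.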

The Btrfs wiki has an entry for RAID56. It has long said that:

"the parity RAID code is not suitable for any system which might encounter unplanned shutdowns (power failure, kernel lock-up), and it should not be considered production-ready."

The mkfs.btrfs manual page has also warned against btrfs RAID5 and RAID6 arrays for years and years:

"RAID5/6 is still considered experimental and shouldn’t be employed for production use."

People have, for some reason, created RAID5 and RAID6 arrays with mkfs.btrfs anyway. In cases where power outages, kernel crashes and other unclean shutdowns have taken place, those people have learned the hard way that it is not a good idea.
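Why unplanned shutdowns are so dangerous for parity RAID is easiest to see in a toy model of the so-called write hole, one commonly cited problem with btrfs RAID5/6. This is a simplified, generic illustration of XOR parity, not btrfs's actual on-disk logic; the block contents and function names are made up. A stripe's data and parity blocks are written separately, so a crash between the two writes leaves parity that no longer matches the data, and a later rebuild silently produces wrong contents.

```python
from functools import reduce


def xor_blocks(*blocks: bytes) -> bytes:
    """Byte-wise XOR of equally sized blocks (RAID5-style parity)."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def write_hole_demo() -> bytes:
    # One stripe: three data blocks plus one parity block, each on its own drive.
    stripe = [b"AAAA", b"BBBB", b"CCCC"]
    parity = xor_blocks(*stripe)

    # Overwrite block 1 in place. The new data reaches its drive, but the
    # power fails before the matching parity update is written, so `parity`
    # still describes the old block 1.
    stripe[1] = b"bbbb"

    # Later the drive holding block 2 dies, and the array rebuilds it from
    # the surviving data blocks plus the stale parity.
    return xor_blocks(stripe[0], stripe[1], parity)


if __name__ == "__main__":
    print("expected block 2:", b"CCCC")
    print("rebuilt  block 2:", write_hole_demo())
```

Running it shows `b'cccc'` for the rebuilt block instead of the original `b'CCCC'`: the rebuild completes without any error, but the data it produces is wrong.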

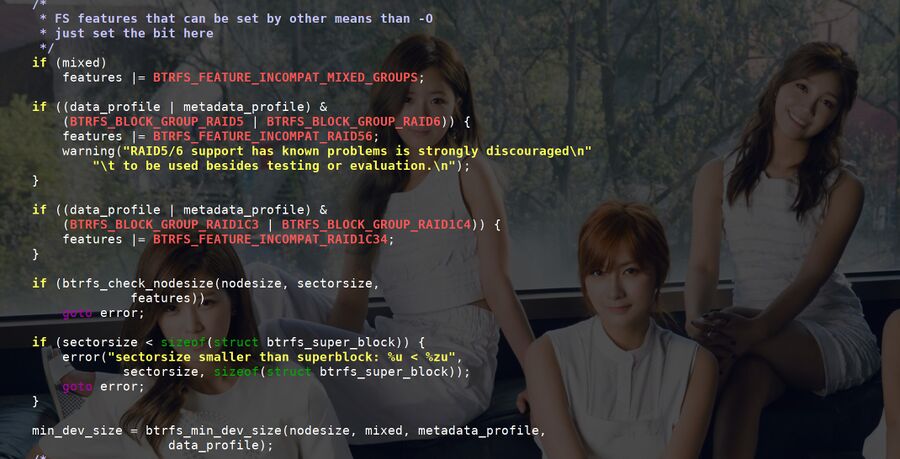

btrfs-progs version 5.11, released on March 5th, will now warn users who try to create RAID5 and RAID6 arrays using mkfs.btrfs. The warning reads:

"RAID5/6 support has known problems is strongly discouraged to be used besides testing or evaluation."

mkfs/main.c from btrfs-progs-v5.11.

We encourage our readers to take the numerous warnings against btrfs RAID5/6 arrays seriously. If you really want to use btrfs, it is of course possible to create a standard Linux software RAID array with mdadm and put btrfs on top of it. XFS is also a great file system for larger RAID arrays that will mostly store large files. There is one very annoying pitfall to be aware of before deploying XFS anywhere: XFS file systems can't be shrunk. You can shrink btrfs, ext4 and a few other file systems, and that may be an advantage down the road.

If you already have a btrfs RAID5 or RAID6 array for some reason, you may want to consider re-creating it as an mdadm array. That is not as easy as it sounds: you will have to copy everything onto another disk or array and create a new array from scratch. It may be worth it if you don't want to lose all your data to a power outage, a kernel panic or something else bad.

The source code for btrfs-progs 5.11 and other versions can be found at mirrors.edge.kernel.org/pub/linux/kernel/people/kdave/btrfs-progs/.
