New stable kernels: 5.3.6, 4.19.79 and 4.14.149 are now available

The new stable-series kernels include a small fix for a performance regression that has drastically reduced performance on multi-core systems running lots and lots of virtual machines since January 2017. Engineers at Tencent measured about eight times higher performance on their test system when the patch that caused the regression was reverted.

Stable kernels 5.3.6, 4.19.79 and 4.14.149 all include the important VM fix. The 5.x, 4.19 and 4.14 series were all released after the regression was introduced in January 2017, so they are all affected. A 5.2.21 kernel with a blank changelog was also released at the same time, for unknown reasons. The 5.2 series reached its end of life with 5.2.20, so that release was probably a mistake.

Wanpeng Li of the Chinese company Tencent had this to say about reverting the patch that made virtual machines perform up to eight times worse than they should have for years:

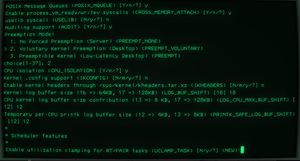

"Revert "locking/pvqspinlock: Don't wait if vCPU is preempted"

commit 89340d0935c9296c7b8222b6eab30e67cb57ab82 upstream.

This patch reverts commit 75437bb304b20 (locking/pvqspinlock: Don't wait if vCPU is preempted). A large performance regression was caused by this commit on over-subscription scenarios.

The test was run on a Xeon Skylake box, 2 sockets, 40 cores, 80 threads, with three VMs of 80 vCPUs each. The score of ebizzy -M is reduced from 13000-14000 records/s to 1700-1800 records/s:

| Host | Guest | Score |
|------|-------|-------|
| vanilla w/o kvm optimizations | upstream | 1700-1800 records/s |
| vanilla w/o kvm optimizations | revert | 13000-14000 records/s |
| vanilla w/ kvm optimizations | upstream | 4500-5000 records/s |
| vanilla w/ kvm optimizations | revert | 14000-15500 records/s |

Exit from aggressive wait-early mechanism can result in premature yield and extra scheduling latency.

Actually, only 6% of wait_early events are caused by vcpu_is_preempted() being true. However, when one vCPU voluntarily releases its vCPU, all the subsequent waiters in the queue will do the same and the cascading effect leads to bad performance.

kvm optimizations:

- [1] commit d73eb57b80b (KVM: Boost vCPUs that are delivering interrupts)

- [2] commit 266e85a5ec9 (KVM: X86: Boost queue head vCPU to mitigate lock waiter preemption)"
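As a quick sanity check on the "eight times" figure, the Python sketch below turns the ebizzy score ranges from the commit message into speedup ratios (reverted score divided by upstream score). Using the midpoint of each range is our own simplification, since the table only gives ranges; the bounds function shows the full spread the ranges allow.

```python
# Score ranges (records/s) copied from the ebizzy -M table in the commit message.
scores = {
    ("w/o kvm optimizations", "upstream"): (1700, 1800),
    ("w/o kvm optimizations", "revert"): (13000, 14000),
    ("w/ kvm optimizations", "upstream"): (4500, 5000),
    ("w/ kvm optimizations", "revert"): (14000, 15500),
}


def midpoint(score_range):
    """Midpoint of a (low, high) score range (a simplifying assumption)."""
    low, high = score_range
    return (low + high) / 2


def speedup(host):
    """Reverted/upstream ratio of the range midpoints for one host config."""
    return midpoint(scores[(host, "revert")]) / midpoint(scores[(host, "upstream")])


def speedup_bounds(host):
    """Smallest and largest ratio consistent with the two score ranges."""
    up_low, up_high = scores[(host, "upstream")]
    rv_low, rv_high = scores[(host, "revert")]
    return rv_low / up_high, rv_high / up_low


for host in ("w/o kvm optimizations", "w/ kvm optimizations"):
    lo, hi = speedup_bounds(host)
    print(f"{host}: {speedup(host):.1f}x (range {lo:.1f}x-{hi:.1f}x)")
```

Run as-is, it prints 7.7x (range 7.2x-8.2x) for the host without the KVM optimizations and 3.1x (range 2.8x-3.4x) for the host with them, which is consistent with the roughly eightfold figure quoted for the unoptimized host.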
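The cascade described in the commit message can be illustrated with a toy model. The Python sketch below is not kernel code and does not reproduce the pvqspinlock implementation; it only encodes the commit's claim that, with wait-early enabled, a waiter gives up its CPU when the waiter ahead of it looks preempted. It assumes, as the commit implies, that a vCPU which gave up its CPU voluntarily also looks preempted to the next waiter. The function name `simulate_queue` and its parameters are hypothetical.

```python
from typing import List


def simulate_queue(preempted: List[bool], wait_early: bool) -> List[bool]:
    """Toy model, not kernel code: which queued lock waiters end up off-CPU.

    preempted[i] is True if the host preempted waiter i's vCPU.
    Waiters are visited in queue order. With wait_early enabled, a waiter
    also gives up its CPU when its predecessor is off-CPU, because (by
    assumption) a voluntarily halted vCPU looks preempted to the next waiter.
    """
    off_cpu: List[bool] = []
    for i, was_preempted in enumerate(preempted):
        predecessor_off = i > 0 and off_cpu[i - 1]
        off_cpu.append(was_preempted or (wait_early and predecessor_off))
    return off_cpu


# Eight waiters, only the second one preempted by the host.
queue = [False, True, False, False, False, False, False, False]
print("wait-early on: ", sum(simulate_queue(queue, wait_early=True)), "off-CPU")
print("wait-early off:", sum(simulate_queue(queue, wait_early=False)), "off-CPU")
```

With wait-early on, the single preemption pushes the second waiter and all six behind it off the CPU (7 of 8); with it off, only the preempted waiter is affected (1 of 8). The real implementation has further behaviour, such as halting after a bounded spin, that this model ignores.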

Most of us do not have 40-core, 80-thread machines to play with. Those who do should probably upgrade their kernel if it is a 4.14, 4.19 or 5-series kernel. We have not tested how this plays out on a puny 6-core machine running a handful of virtual machines, but we suspect there is a notable and undesired performance penalty there too.
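For readers wondering whether a given machine already has the revert, the Python sketch below compares a kernel release string (such as the output of `uname -r`, which Python exposes as `platform.release()`) against the three stable releases named in this article. It assumes a vanilla stable version number: distribution kernels often backport fixes without changing the version, so treat the result as a rough hint only. The function name `has_pvqspinlock_revert` is hypothetical, and it returns `None` for unparseable strings and for any series this article does not cover.

```python
import platform
import re
from typing import Optional

# Stable releases named in this article as carrying the revert.
FIXED_IN = {(5, 3): 6, (4, 19): 79, (4, 14): 149}


def has_pvqspinlock_revert(release: str) -> Optional[bool]:
    """Guess whether a vanilla kernel release string includes the revert.

    Returns True/False for the 5.3, 4.19 and 4.14 series, and None when the
    string can't be parsed or belongs to a series the article doesn't cover.
    """
    m = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", release)
    if m is None:
        return None
    major, minor = int(m.group(1)), int(m.group(2))
    patch = int(m.group(3) or 0)  # "5.3" means 5.3.0
    fixed = FIXED_IN.get((major, minor))
    if fixed is None:
        return None
    return patch >= fixed


if __name__ == "__main__":
    release = platform.release()
    print(release, "->", has_pvqspinlock_revert(release))
```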

Looking elsewhere, there are a lot of EROFS fixes in the new 4.19.79 and 5.3.6 kernels. This read-only filesystem for mobile and embedded systems is still stuck in the kernel's "staging" area despite attempts to get it out of there. Huawei's Gao Xiang got a lot of bug fixes into these kernels, and into the mainline git tree, this round, so there is a chance that it will move out of staging when kernel 5.5 is released. The 5.4 release candidates have, for now, kept it contained in the staging area.

There is, of course, a very long list of other changes in kernels 5.3.6, 4.19.79 and 4.14.149. None of them stood out as particularly interesting.

published 2019-10-12 - last edited 2019-10-15
