Webalizer

| Original author(s) | Bradford L. Barrett |
|---|---|
| Initial release | 1997 |
| Stable release | 2.23-08 / August 26, 2013 |
| Written in | C |
| Operating system | Linux, Cross-platform |
| Available in | Over 30 languages |
| Type | Web Traffic Analytics |
| License | GNU GPL |
| Website | www.webalizer.org |

Webalizer is a log file analyzer, primarily for Apache logs, that creates a simple report with some small graphs and a high-level overview of page views, unique visits, files, kilobytes transferred and total file hits. It's fast, and it supports a history file that allows you to simply throw old logs away once webalizer is done with them. It does have some shortcomings, like the inability to separate browser user-agent strings by device and operating system.

Webalizer has not been updated since 2013. It works fine, but don't expect it to get any new features beyond what the current version has.


Features

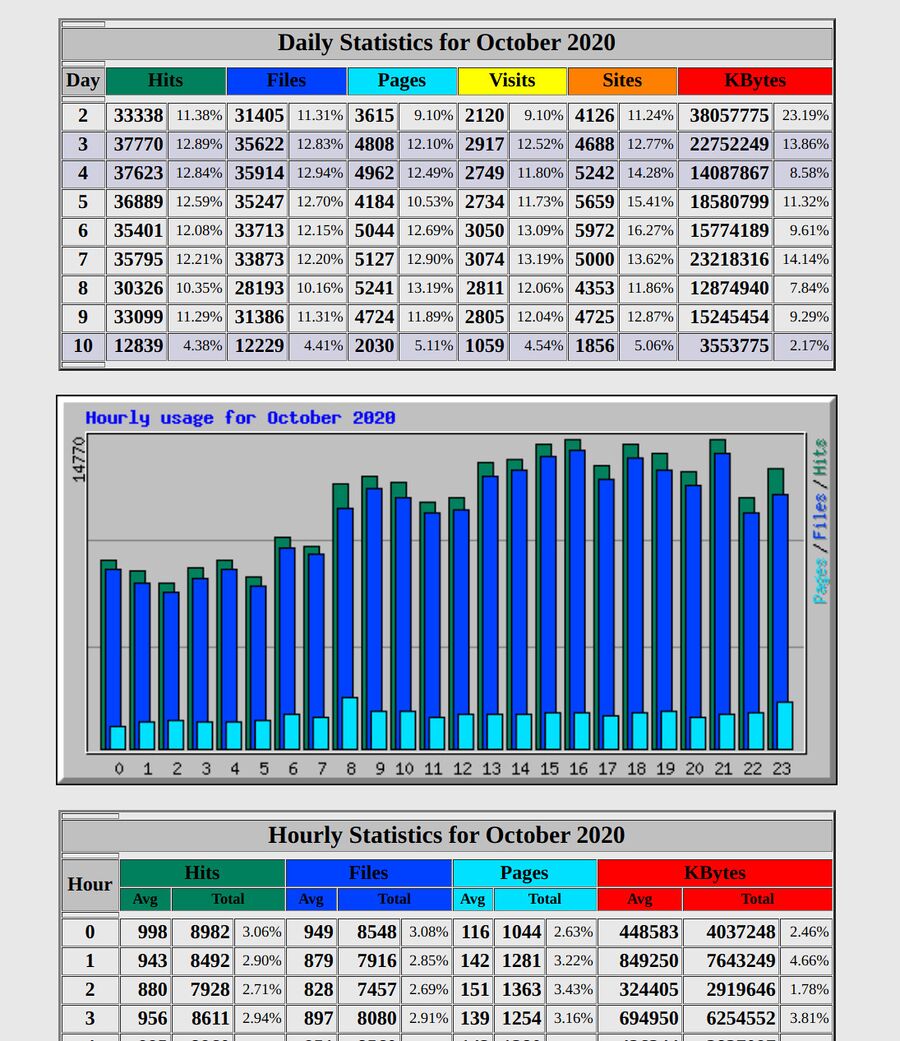

Webalizer can create a simple HTML page with some graphs and some data about your website's traffic.

Its main areas are:

- Monthly Statistics (no graphs, just numbers)

- Daily Statistics (with graph) with hits, files, pages, visits, sites and KBytes. It doesn't say how many pages a single visitor viewed or things like that (you can divide pages by visits to get an average), but it does give a nice overview.

- Hourly Statistics (with a graph). The statistics it shows you are per-hour for the entire month.

- Top XXX URLs (configurable how many)

- Top XXX URLs by KBytes

- Entry and Exit pages

- Top XXX referrers

- Top search strings (if you configured it so it knows about search-engines)

- Top XXX user-agent strings (you have to manually configure it if you want them grouped)

- Top XXX countries (this works fine if you configure it to use a GeoIP database)
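The Daily Statistics entry above notes that pages per visit is not reported but can be derived by division. A minimal sketch of that arithmetic follows; the function name and the totals are made up for illustration and are not Webalizer output.

```python
def pages_per_visit(pages: int, visits: int) -> float:
    """Average number of pages viewed per visit; 0.0 when there were no visits."""
    if visits <= 0:
        return 0.0
    return pages / visits


# Hypothetical totals read off one row of a daily statistics table.
print(pages_per_visit(pages=1200, visits=400))  # prints 3.0
```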

Webalizer does give an overview of the most important things you need to know about a site if, and only if, you have done more configuration than should be required. Put simply, quite a few things require manual configuration. Bots, web spiders and other things that shouldn't be counted as regular visitors have to be manually excluded. User-agents are not grouped by default. They can be grouped, but you have to list all the strings you want grouped, and each group can only match on one string. That makes it hard to put those using Chrome on Windows in one group and those who use Chrome on Android in another. With one string you could put everyone using Windows in one group and those using Android in another, but then you can't tell which browsers the Windows users use. It would be nice if Webalizer could group user-agents using two strings, but it can't.

An example of statistics created by Webalizer.

Webalizer is better than nothing, and it does work. It's also fairly fast thanks to its support for history files. You can parse a lot with Webalizer, reset the log and keep the historical statistics. This is also nice from a privacy perspective: you can run Webalizer every 6 hours and eradicate the old log while keeping the actual statistics from prior periods intact.
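The analyze-then-discard routine described above could be scripted roughly as follows. This is only a sketch under assumptions: the function name is hypothetical, it relies on the `-c` option covered under Installation And Configuration, and it assumes incremental mode is enabled so earlier statistics are kept. Requests logged between the end of the run and the truncation would be lost, so treat it as a starting point rather than a finished job.

```python
import subprocess


def analyze_and_reset(config_file, log_file, runner=subprocess.run):
    """Run webalizer, then empty the log only if the run succeeded."""
    result = runner(["webalizer", "-c", config_file], check=False)
    if result.returncode != 0:
        return False  # keep the log so nothing is lost when the run fails
    # Truncate in place; the statistics live on in the history and
    # incremental files named in the configuration.
    with open(log_file, "w"):
        pass
    return True
```

A scheduler such as cron could call this every 6 hours. The `runner` parameter exists only so the function can be exercised without webalizer installed.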

One big disadvantage of using Webalizer is that it doesn't "just work" unless you spend perhaps too much time configuring it. Once that's done, it will just work for ages.

Webalizer lets you ignore user-agents, URLs/"pages" and file-types so they don't show up or count as regular page-views (for example, you don't want load.php to be counted as a "page" if you use MediaWiki like this site does).

Webalizer is a nice web log analyzer, but it does require quite a lot of configuration to make the statistics it presents meaningful, and how you should configure it depends on what content management system(s) you use.

Installation And Configuration

All the distributions seem to have webalizer included as a package with that name. The bigger problem you will have once you have it installed will be configuring it.

You should acquire the geodb-latest.tgz file from ftp://ftp.mrunix.net/pub/webalizer/geodb/geodb-latest.tgz before you configure it. Web browsers like Chromium are removing support for the ftp:// protocol, so just use wget in a terminal if your web browser can't handle ftp:// links.

webalizer lets you run it with -c configurationfile. You can easily run it with five different configuration files for five different sites.
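As an illustration of running one configuration file per site, the sketch below builds a `webalizer -c <file>` command for each file and runs them in turn. The function names and the example paths are hypothetical; only the `-c` option comes from the text above.

```python
import subprocess


def build_commands(config_files):
    """Return one `webalizer -c <file>` argument list per configuration file."""
    return [["webalizer", "-c", str(cfg)] for cfg in config_files]


def run_all(config_files, runner=subprocess.run):
    """Run webalizer once per configuration file and collect the exit codes."""
    exit_codes = {}
    for cmd in build_commands(config_files):
        result = runner(cmd, check=False)
        exit_codes[cmd[2]] = result.returncode
    return exit_codes


# Hypothetical paths; substitute the configuration files for your own sites.
for command in build_commands(["/etc/webalizer/site-a.conf",
                               "/etc/webalizer/site-b.conf"]):
    print(" ".join(command))
```

Calling `run_all(...)` with the same list would actually start webalizer, so it is left out of the demonstration.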

The bare minimum a webalizer configuration file should have is

HostName yoursite.tld

LogFile access_log

OutputDir /home/httpd/vhosts/yoursite/httpdocs/webstat/

HistoryName /home/httpd/vhosts/yoursite/statistics/logs/webalizer.hist

IncrementalName /home/httpd/vhosts/yoursite/statistics/logs/webalizer.current

Incremental yes

UseHTTPS yes

DNSChildren 0

TopURLs 50

TopReferrers 50

TopAgents 40

TopSites 0

TopKSites 0

AllSearchStr yes

Quiet yes

FoldSeqErr yes

webalizer needs to be able to write to the OutputDir and the HistoryName and IncrementalName files.
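A quick way to check those permissions before the first run is sketched below. The helper name is hypothetical, and the check reflects the user running the script, so it should be run as the account that will run webalizer.

```python
import os


def unwritable_paths(output_dir, state_files):
    """Return the paths the current user could not write to."""
    problems = []
    if not (os.path.isdir(output_dir) and os.access(output_dir, os.W_OK)):
        problems.append(output_dir)
    for path in state_files:
        if os.path.exists(path):
            ok = os.access(path, os.W_OK)
        else:
            # A missing file can only be created if its directory is writable.
            ok = os.access(os.path.dirname(path) or ".", os.W_OK)
        if not ok:
            problems.append(path)
    return problems


# Paths taken from the example configuration above.
print(unwritable_paths(
    "/home/httpd/vhosts/yoursite/httpdocs/webstat/",
    ["/home/httpd/vhosts/yoursite/statistics/logs/webalizer.hist",
     "/home/httpd/vhosts/yoursite/statistics/logs/webalizer.current"],
))
```

An empty list means all three locations look writable.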

TopSites and TopKSites statistics are only interesting if you want to make one statistics page for multiple sites.

You will want to extract the geodb-latest.tgz mentioned earlier, place it somewhere webalizer can read it, and tell webalizer to use it in the configuration file:

GeoDB yes

GeoDBDatabase /home/httpd/webalizer/GeoDB.dat
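Extracting the database could look something like the sketch below. It assumes the archive contains a file named `GeoDB.dat` (the name used in the `GeoDBDatabase` line above) somewhere inside it; the archive layout has not been verified here, and the function name is hypothetical.

```python
import os
import shutil
import tarfile


def extract_geodb(archive_path, dest_dir, member_name="GeoDB.dat"):
    """Copy the database file out of the archive and return its new path."""
    os.makedirs(dest_dir, exist_ok=True)
    with tarfile.open(archive_path, "r:gz") as archive:
        for member in archive.getmembers():
            # Match on the base name so a leading directory does not matter.
            if member.isfile() and os.path.basename(member.name) == member_name:
                target = os.path.join(dest_dir, member_name)
                with archive.extractfile(member) as source, open(target, "wb") as out:
                    shutil.copyfileobj(source, out)
                return target
    raise FileNotFoundError(f"{member_name} not found in {archive_path}")
```

Something like `extract_geodb("geodb-latest.tgz", "/home/httpd/webalizer")` would then produce the path used in the configuration lines above.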

You will likely also want quite a few IgnoreURL directives like

IgnoreURL /w/

And some PageType options listing file-types that count as pages:

PageType htm*

PageType cgi

PageType php

PageType shtml

Search engines are only recognized as referrers if they are listed. So you need a long list of those:

SearchEngine 348north.com search=

SearchEngine abcsearch.com terms=

SearchEngine alltheweb.com q=

Any website that's been around for a few months gets lots and lots of web crawlers visiting. Most of them are worthless, but that's another story. You will want a long list of IgnoreAgent directives so those are excluded from the statistics:

IgnoreAgent 360Spider

IgnoreAgent FemtosearchBot

IgnoreAgent www.semrush.com/bot

IgnoreAgent www.bing.com/bingbot

IgnoreAgent www.sogou.com
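Finding candidates for that list usually means looking at which user-agents actually appear in the log. The sketch below counts them, assuming the Apache combined log format where the user-agent is the last double-quoted field on each line. The function names and the hint words are illustrative assumptions, and user-agents containing escaped quotes would be cut short.

```python
import re
from collections import Counter

# In the combined log format the user-agent is the last double-quoted field.
LAST_QUOTED_FIELD = re.compile(r'"([^"]*)"\s*$')


def count_user_agents(lines):
    """Count how often each user-agent string appears in the given log lines."""
    counts = Counter()
    for line in lines:
        match = LAST_QUOTED_FIELD.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


def suspected_crawlers(counts, hints=("bot", "spider", "crawl")):
    """Return (agent, hits) pairs whose agent contains one of the hint words."""
    return [
        (agent, hits)
        for agent, hits in counts.most_common()
        if any(hint in agent.lower() for hint in hints)
    ]
```

Passing an open log file to `count_user_agents` and the result to `suspected_crawlers` gives a list to review by hand. Crawlers that don't identify themselves won't be caught this way.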

Lastly, if you want to group web browser user-agents together so multiple versions of, say, Mozilla Firefox are just listed as Firefox, you'll need a lot of options with both GroupAgent and HideAgent. If you add a GroupAgent without a following HideAgent, you'll get one entry with the user-agents grouped and another with them listed individually.

Those entries can look like

GroupAgent "Mozilla/5.0 (X11; CrOS x86_64" ChromiumOS

HideAgent Mozilla/5.0 (X11; CrOS x86_64

GroupAgent "Win64; x64; rv:" Firefox on Windows

HideAgent Win64; x64; rv:
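Since every GroupAgent needs a matching HideAgent, generating the pairs from one list avoids forgetting the second line. A minimal sketch, with a hypothetical helper name, that reproduces the entries above:

```python
def group_and_hide(groups):
    """Build a GroupAgent line followed by its HideAgent line for each pattern."""
    lines = []
    for pattern, label in groups:
        lines.append(f'GroupAgent "{pattern}" {label}')
        lines.append(f"HideAgent {pattern}")
    return lines


# The two patterns used in the entries above.
for line in group_and_hide([
    ("Mozilla/5.0 (X11; CrOS x86_64", "ChromiumOS"),
    ("Win64; x64; rv:", "Firefox on Windows"),
]):
    print(line)
```

The printed lines can be pasted into the configuration file as they are.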

See Webalizer/Configuration file example for an example of a configuration file with very long lists of SearchEngine and IgnoreAgent and other configuration directives a webalizer configuration file needs in order to get meaningful statistics from it.

Verdict And Conclusion

Webalizer can be used to create some basic statistics about a website's traffic using nothing but a web server's log files. It can't produce as much information about a site's visitors as tools using JavaScript can, so the information it can present is somewhat limited. That may or may not be a good thing depending on how you look at it. That it produces what it does using nothing but logs can be an advantage: you don't have to violate people's privacy or run JavaScript in their web browsers.

A big and clear downside to using Webalizer is that it needs to be properly configured in order to produce anything useful. If you, for example, don't give it an increasingly long list of user-agents to ignore with IgnoreAgent directives, you'll get statistics that show double or triple your actual traffic if you run a small website.

If you're willing to spend some time configuring Webalizer then it's kind-of fine as an alternative to things like Google Analytics. You'll get less information, but you will get the most important details like how many people visited your site, how many pages they viewed, what pages are most visited, when people visit and a few other statistics.

Alternatives

- Analog is a similar log analyzer. It's far worse than Webalizer, and it doesn't support partial updates.

- Open Web Analytics is not a log analyzer. It is a more fully featured web analytics server written in PHP. It can provide more detailed information than logs can, but it's also far more privacy-invasive to your site's visitors as it uses cookies and, depending on how you configure and deploy it, client-side JavaScript.

Links

The webalizer website is at webalizer.org. It hasn't been updated in a decade, but it's there and it works.
